In the post Fear & Loathing in the Ad Technology Stack (3/8/11), TOTSB opined about the latent dangers of having a tag management platform provided by the same vendor as the site analytics solution. Since then, IBM CoreMetrics joined the fray with their Digital Data Exchange solution. Earlier this week, the other and much bigger shoe dropped as Google announced their new and free Tag Manager.

With this latest development, it seemed like a good time to take a look at digital marketers’ often foolish handling of their customers’ behavioral data. These days such foolishness is like leaving the safe open with money in plain view. Now, let’s take a closer look at what is being offered by Google.

How Does It Work?

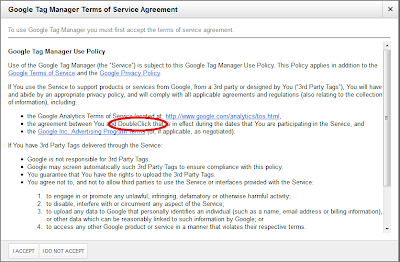

The appeal of Google Tag Manager is understandable: “Google Tag Manager is easy, free, and reliable. It gives marketers greater flexibility, and it lets webmasters relax and focus on other important tasks.” Signing up is easy enough and, like many other Google tools, takes just a few minutes. Digital marketers can even “opt-out” of anonymously sharing their data for benchmarking purposes. However, this is a faux bone thrown by Google, as a subsequent screen reveals.

Later, users learn that they are actually agreeing to share their data with DoubleClick, Google’s advertising business, and signing up for AdWords, too. It is odd that users must explicitly agree to this to use a Tag Management System. On the final screen you can then add some 3rd party tags. Conveniently, this screen is pre-populated with Google’s AdWords, DoubleClick Floodlight and Google Analytics tags. Supposedly other tracking tags will be coming soon with such drag-and-drop simplicity. Until then you can add custom code.

Google Tag Manager is:

- Asynchronous itself and calls 3rd party tags asynchronously which means that slow-loading tags (including itself) won’t slow down page download time.

- Not server-to-server (S2S)…at least that is not yet clear. That means tags are literally firing on all requests, which is technically a worse-engineered solution when simultaneously using other Google products and services. When GTM does go S2S, certainly it will be positioned as a speed benefit…just ignore the looming centralized consolidated Google master cookie.

- Using a Data Layer. Handy, as it means that there is a way to manage standardized data elements from user behavior on a page or other integrated systems.

- No SLA. That is what free means; as a result this makes GTM less appropriate for enterprise-sized clients. Perhaps this will be included in Google Analytics Premium.
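To make the Data Layer idea a bit more concrete, here is a minimal sketch of the general pattern: page code pushes standardized key/value pairs into one shared structure, and tags subscribe to it instead of scraping the page themselves. This is not Google Tag Manager’s implementation. The class `SimpleDataLayer`, its methods, and the keys `pageCategory`, `event` and `orderValue` are all hypothetical names chosen for illustration.

```typescript
// Hypothetical, simplified data layer. NOT Google Tag Manager's actual code;
// all names below are assumptions made for illustration only.
type DataLayerEntry = Record<string, unknown>;
type TagListener = (entry: DataLayerEntry, state: DataLayerEntry) => void;

class SimpleDataLayer {
  private entries: DataLayerEntry[] = [];
  private state: DataLayerEntry = {};
  private listeners: TagListener[] = [];

  // A tag registers once and is notified of every later push.
  subscribe(listener: TagListener): void {
    this.listeners.push(listener);
  }

  // Page code pushes standardized data; later pushes overwrite earlier keys.
  push(entry: DataLayerEntry): void {
    this.entries.push(entry);
    this.state = { ...this.state, ...entry };
    for (const listener of this.listeners) {
      listener(entry, this.state);
    }
  }

  // Read the latest merged value for a key.
  get(key: string): unknown {
    return this.state[key];
  }
}

const layer = new SimpleDataLayer();
const firedTags: string[] = [];

// A made-up "conversion tag" that fires only on purchase events and reads
// page context from the merged state rather than from the page markup.
layer.subscribe((entry, state) => {
  if (entry["event"] === "purchase") {
    firedTags.push(
      `conversion:${String(state["pageCategory"])}:${String(entry["orderValue"])}`
    );
  }
});

layer.push({ pageCategory: "checkout" });
layer.push({ event: "purchase", orderValue: 49.99 });
```

The point of the pattern is that whoever operates the container sees every element pushed into the layer, which is exactly why the question of who provides the container matters.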

The Trojan Horse

Now for the rub. Considering the success of Google’s model of free analytics, this move by Google should not be a big surprise. If you weren’t already sharing your data with the Google data-mining machine, now there is one more way for them to get even more breadth of data capture.

Combined with their search, free email, social and display media businesses, Google continues to steadily touch more and more of the entire digital stack. That means they also have maximum user depth, i.e. the full end-to-end view of cause and effect. It is this rich, vast global data set that Google’s engineers have trained their sights on analyzing. The reality is that most digital marketers aren’t technically savvy enough to realize the free Google stack is a digital data Trojan Horse, much less do anything about it. When you are used to getting the milk for free why would you want to pay for the cow? Let’s face it – it is a brilliant strategy.

Even if digital marketers decide to forgo Google Analytics and upgrade to a pure-play enterprise analytics solution (not a fake one like Google Analytics Premium), they still have a hole in the data bucket…now thanks to Google Tag Manager. Let’s just call it Google’s little data collection hedge.

At the same time, for most Tag Management System vendors this is going to be a really big problem. Google will now commence to eat many TMS vendors’ lunch by putting tremendous price pressure on the market…kind of like dumping. Many digital marketers have already invested in what we can refer to as TMS 1.0, where it’s all about putting tags in containers, albeit through non-server-to-server solutions. Interestingly, many of them are using their paid TMS to deliver their free Google Analytics. Arguably, these clients are the most at risk to Google’s freebies.

Think about it: these TMS 1.0 providers cannot compete any time soon with what will soon be a cloud-based (S2S) architecture. It will be difficult, expensive and risky to change their platforms with many clients and very tedious implementations already behind them. Expect to see more consolidation as a result.

The High Cost of “Free”

Most digital marketers have been blissfully unaware of the actual game that they have been playing with Google for years – all under the auspices of free and easy-to-use. Perpetuated by self-appointed experts, there is a popular notion that analytics technology should be cheap and that it is more valuable to have well-funded, well-paid analytics people…not an expensive tool. This meme is so Google. It is self-serving and self-reinforcing; it works especially well for the cottage industry of certified implementers and analysts. Unfortunately, it usually also means weak display media measurement, gaping holes in data security/intellectual property control and potentially deep privacy concerns. More tangibly, it could also mean inadvertently feeding your competition through a de facto data co-op while Google makes a buck.

The layers of Google’s conflicts of interest are deep and include:

- Google Remarketing – conveniently baked into Google Analytics these days; the Google advertising cookie and the Google Analytics site cookie have been one and the same for some time now

- Google Analytics – known to overstate Paid Search performance

- Google Search – recently changed how referral data is passed on landing pages, thus obfuscating search performance

- Google Analytics Premium – a thrown bone on fractional attribution and now via DoubleClick Analytics, yet their credibility as an independent arbiter of their own performance is rarely considered

On Being Ethically Challenged About Others’ Intellectual Property

Google’s history is riddled with questionable attitudes towards ownership of others’ data. If your IP attorneys are not paying attention to this – you might need new ones:

Digital Marketer’s Faustian Bargain

The fact of the matter is that Google is really an advertising company, not a technology company. The big question for today’s digital marketers who are considering Tag Manager has not changed. It is the same as the Google Analytics question, i.e. is your company’s most valuable asset (customers’ behavioral data) worth more than the cost of not sharing it with the best data-mining conglomerate in the world? For many smaller companies the answer could be no, but for many larger advertisers the answer should be – thanks, but no thanks.

Google’s latest self-serving, 3-for-me and 1-for-you offering should really motivate digital marketers to start to think differently about the value of their data, how much they trust others with it and what they need to do next to securely and exclusively control their data. Smart advertisers need to start paying attention to how much data they are really sharing with a company that Sir Martin Sorrell best referred to as a “frenemy”…and that was way back in 2007. So much for do no evil.

The good news is that it doesn’t have to be this way.

How to Remove Google from your Ad Stack

Others are also noticing Google’s move, and that digital marketers do have other alternatives…they are just not free. It is back to using common sense and ROI/TCO analysis to justify technology investments…or risk sharing your data with Google and the competition.

Here are some thought-starters:

- Tag Management. Best choice at this point: BrightTag. Yes, I am an advisor. However, the reason I am is that only BrightTag has looked beyond tags on pages to the underlying problem of the data transport layer. Unlike the other TMS 1.0 platforms, BT is already a few years into developing a powerful TMS 2.0 tool; it is based on a highly scalable cloud-based infrastructure that offers digital marketers a real alternative to Google’s encroaching data glom. The good news is that most everyone that matters is already server-to-server integrated with BT…except of course (wait for it…)…Google’s products (Google Analytics, Floodlights, AdWords).

- Analytics: Adobe, ComScore’s Digital Analytix and, if you must, IBM CoreMetrics

- Ad Server: MediaMind, Pointroll, MediaPlex and, if you must, Atlas…not Google Analytics

- Search Management: Kenshoo, Adobe, Marin…anything but DART Search

- Attribution: Adometry, Visual IQ have better methodologies…C3, Convertro, ClearSaleing…not Google Analytics or DFA.

- Demand Side Platform: MediaMath, Turn, DataXu…not Bid Manager (formerly Invite Media).

The truth of the matter should be getting clearer to savvy digital marketers. If not, bring in independent viewpoints that are not invested in this madness. Good luck!

For publishers this is a much more complex proposition and the subject of a future post.